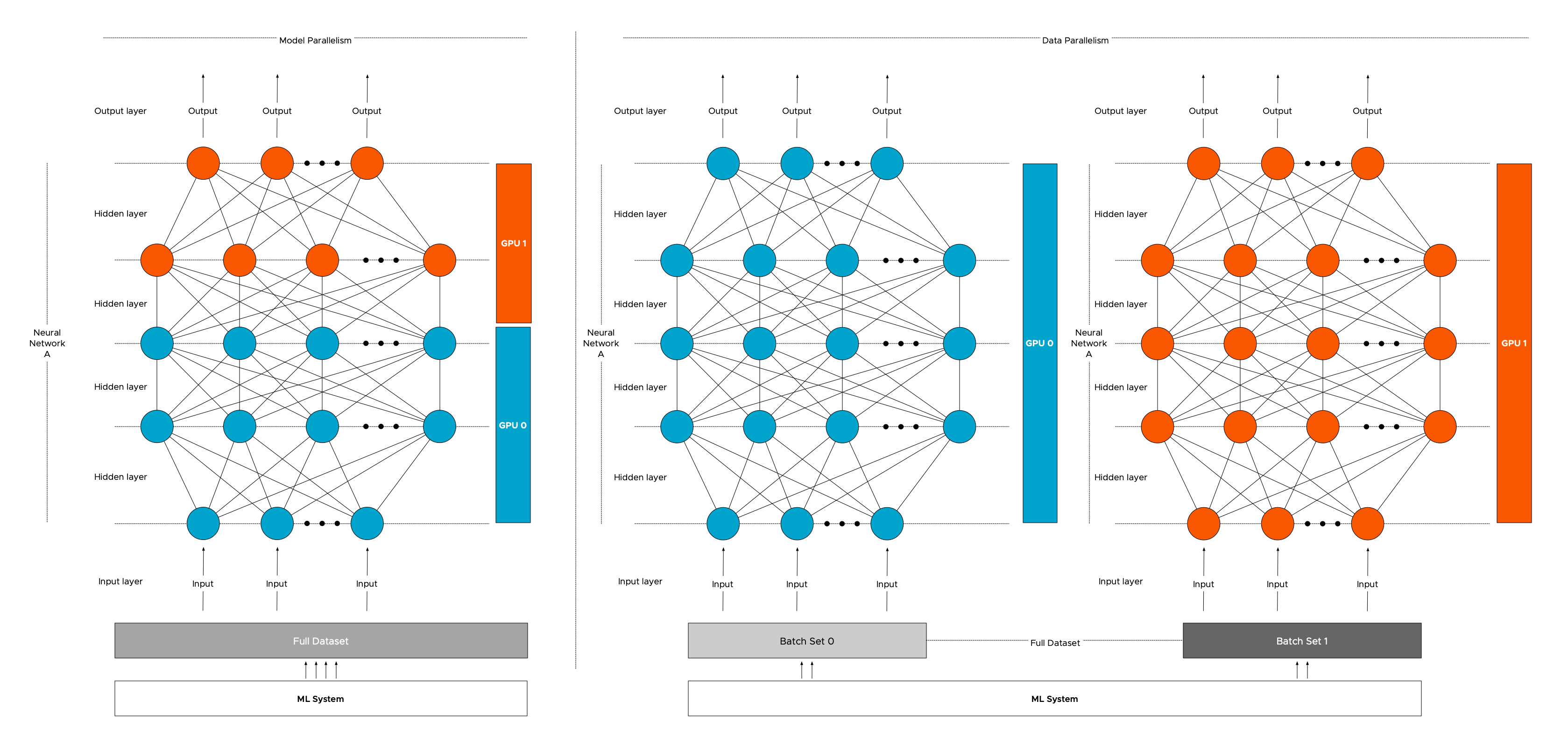

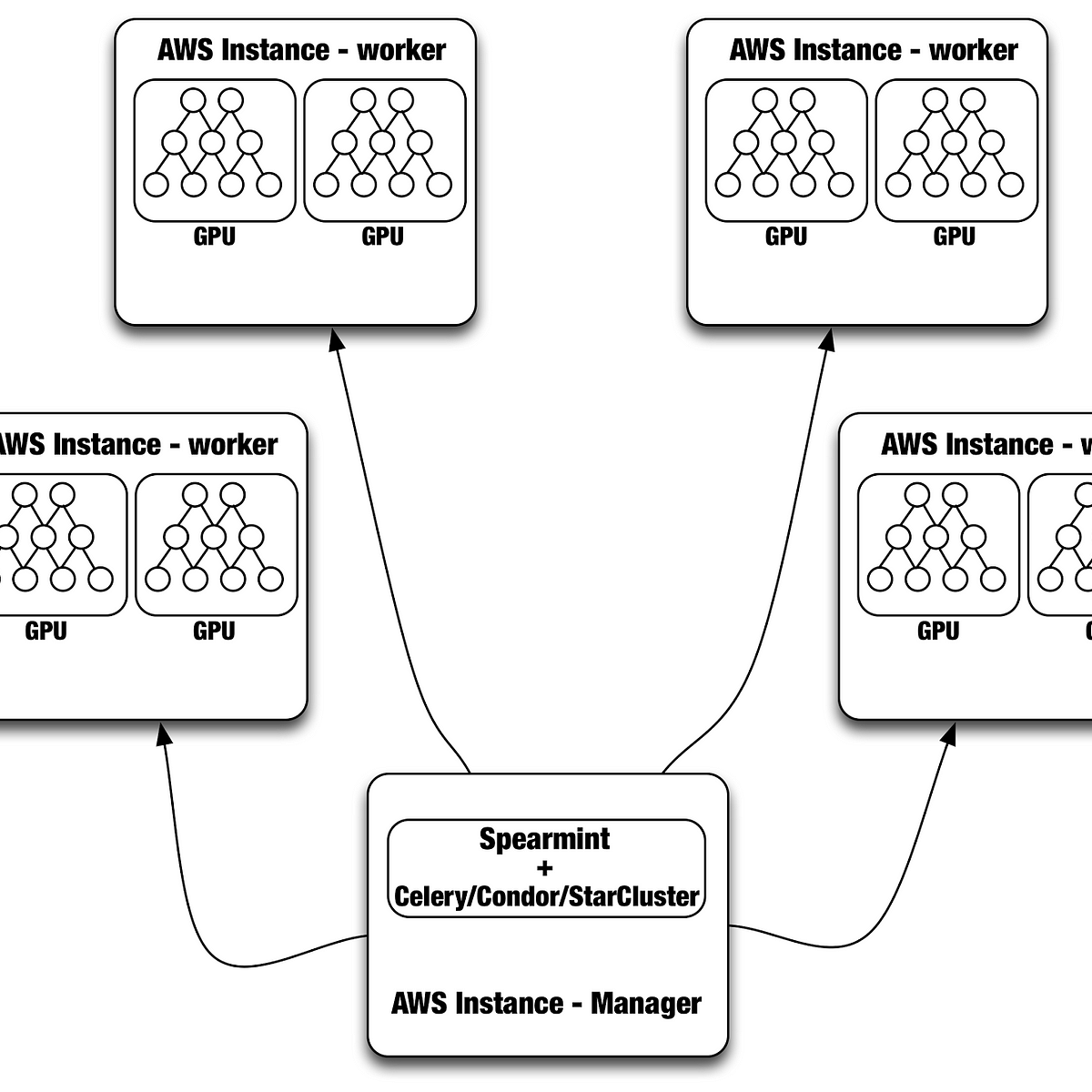

Distributed Neural Networks with GPUs in the AWS Cloud | by Netflix Technology Blog | Netflix TechBlog

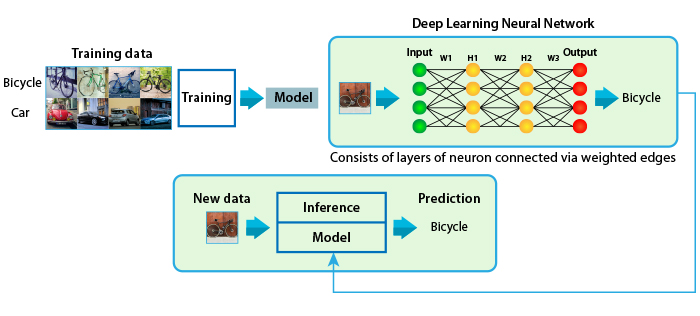

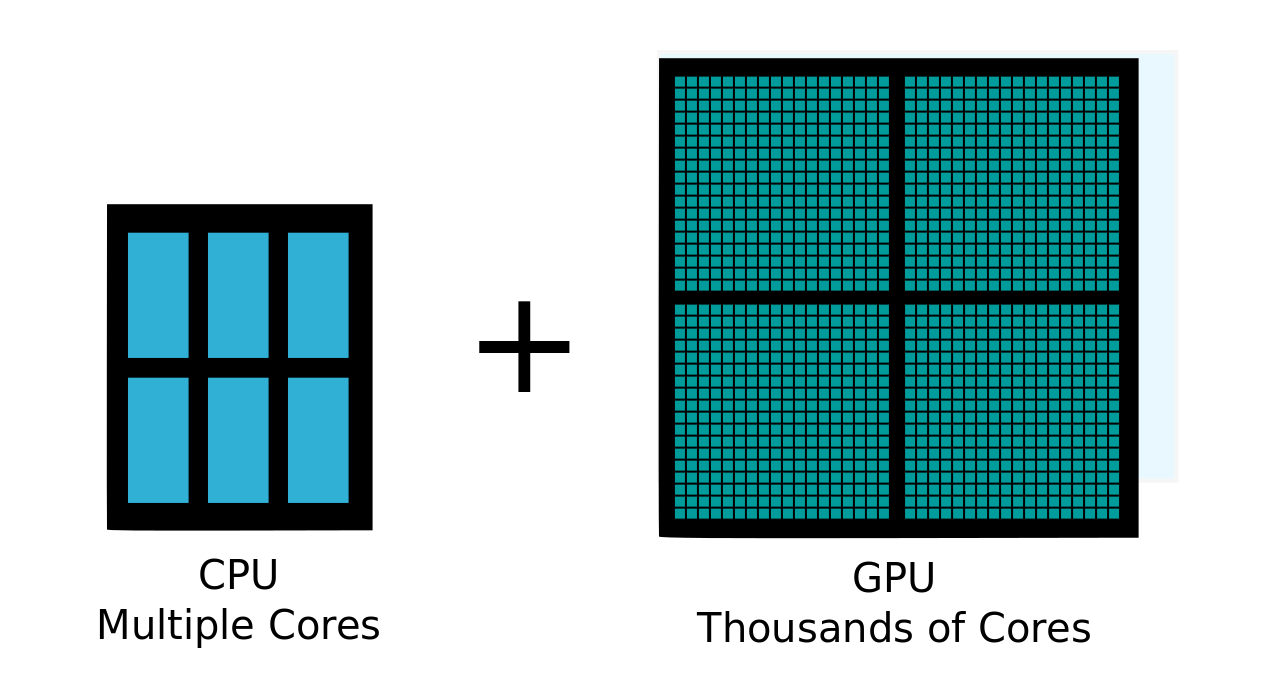

FPGA-based neural network software gives GPUs competition for raw inference speed | Vision Systems Design

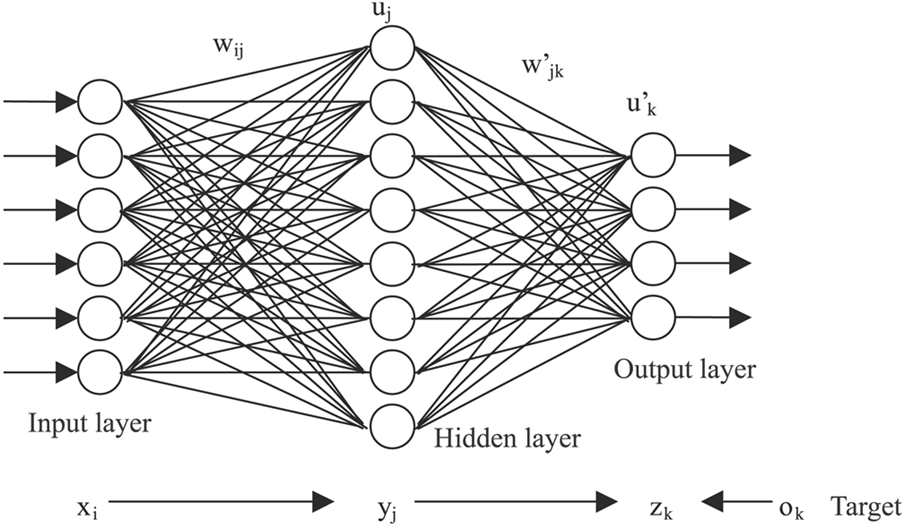

Make Your Own Neural Network: Learning MNIST with GPU Acceleration - A Step by Step PyTorch Tutorial

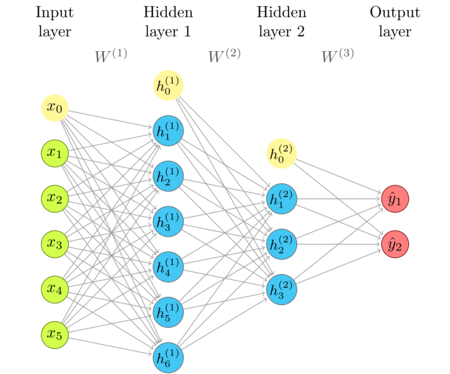

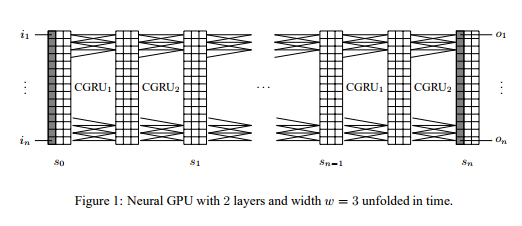

![Extensions and Limitations of the Neural GPU (PDF) | Semantic Scholar](https://d3i71xaburhd42.cloudfront.net/0bb487b9cfefd56e79be0a5be5f1e05742683301/3-Figure1-1.png)

![Neural GPUs Learn Algorithms (PDF) | Semantic Scholar](https://d3i71xaburhd42.cloudfront.net/5e4eb58d5b47ac1c73f4cf189497170e75ae6237/4-Figure1-1.png)